On the biased Two-Parameter Estimator to Combat Multicollinearity in Linear Regression Model

Keywords:

Multicollinearity, Ordinary least-squares, Simulation, Biased two-parameter, Ridge regression estimator

Abstract

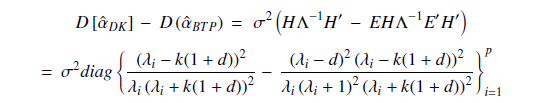

The ordinary least-squares (OLS) estimator is the most widely used estimator of the regression parameters in the linear regression model. In the presence of multicollinearity, however, OLS becomes inefficient. To overcome this problem, a new biased two-parameter (BTP) estimator is proposed as an alternative to OLS. Theoretical comparisons and simulation studies show that the proposed estimator dominates some existing estimators under the mean square error (MSE) criterion. Furthermore, an application to real-life data supports both the theoretical and simulation results. The proposed estimator is therefore preferred to OLS and the other existing estimators considered when multicollinearity is present in the model.
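As a rough illustration of why multicollinearity hurts OLS under the MSE criterion, the sketch below evaluates the standard closed-form scalar MSE of OLS and of the ordinary ridge estimator in canonical form. It does not implement the BTP estimator, whose definition is not given in this abstract; ridge regression stands in as a familiar biased estimator. The correlation `rho`, the canonical coefficients `alpha`, the error variance `sigma2`, and the ridge parameter `k` are all assumed values chosen for illustration only.

```python
# Scalar MSE of OLS and ridge in canonical form (Hoerl-Kennard expressions).
# All numeric settings below are illustrative assumptions, not values from the paper.


def ols_mse(eigenvalues, sigma2):
    """MSE of OLS: sigma^2 * sum(1 / lambda_i), lambda_i eigenvalues of X'X."""
    return sigma2 * sum(1.0 / lam for lam in eigenvalues)


def ridge_mse(eigenvalues, alpha, sigma2, k):
    """MSE of ridge with parameter k: variance term plus squared-bias term."""
    variance = sigma2 * sum(lam / (lam + k) ** 2 for lam in eigenvalues)
    bias_sq = k ** 2 * sum(
        a ** 2 / (lam + k) ** 2 for lam, a in zip(eigenvalues, alpha)
    )
    return variance + bias_sq


# Two standardized predictors with correlation rho: the correlation matrix
# has eigenvalues 1 + rho and 1 - rho, so a high rho gives one tiny eigenvalue.
rho = 0.99                      # assumed degree of collinearity
eigenvalues = [1.0 + rho, 1.0 - rho]
alpha = [1.0, 1.0]              # assumed canonical regression coefficients
sigma2 = 1.0                    # assumed error variance
k = 0.1                         # assumed ridge (biasing) parameter

mse_ols = ols_mse(eigenvalues, sigma2)
mse_ridge = ridge_mse(eigenvalues, alpha, sigma2, k)

print(f"OLS MSE:   {mse_ols:.4f}")
print(f"Ridge MSE: {mse_ridge:.4f}")
```

The small eigenvalue `1 - rho` dominates the OLS MSE through its reciprocal, while the biasing parameter `k` damps that term at the cost of a squared-bias contribution. Setting `k = 0` recovers the OLS expression.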

Copyright (c) 2022 Janet Iyabo Idowu, Olasunkanmi James Oladapo, Abiola Timothy Owolabi, Kayode Ayinde

This work is licensed under a Creative Commons Attribution 4.0 International License.